Samsung unveiled a trio of flagship Galaxy S20 smartphones this week. The top model received a camera with a resolution of 108 megapixels. Many people will probably be wide-eyed at such a figure, and some will cast a disdainful glance at their latest iPhone with its 12 megapixels. They shouldn’t. The number of megapixels is not the main indicator. Below we explain what really matters.

Let’s go in order. Photo resolution is the number of pixels on the canvas. A camera with a resolution of 48 megapixels produces a canvas of 48 million colored dots. A 64-megapixel camera gives a canvas of 64 million dots, and a 108-megapixel sensor gives 108 million.

The only benefit of more pixels is the amount of detail the image can hold. That is, when you zoom in on a 12-megapixel image, you can make out fewer small objects than in a 108-megapixel image.

So this is the most important thing?

No. The most important thing in a mobile camera is the number of scenarios in which it will be useful. A lot of pixels does not mean a lot of scenarios. The main factor affecting the flexibility of a camera is light. There is either a lot of it or a little. The more light, the better the photograph. “Better” means crisper, with good dynamic range and the right white balance. High-megapixel cameras are therefore highly dependent on the amount of light.

As a rule, the more pixels there are, the smaller they are. And that is bad. Imagine that a pixel is a small canvas that photons, the particles of light, crash into. If the pixel is large, many photons land on it. If it is small, too few do.

As a result, in perfect light, 108-megapixel shots will be unambiguously good. In poor light they will not, because small pixels receive little or no light. The result of a light deficit is noise in the pictures. Noise is graininess in a photo: the presence of pixels of the wrong color.
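The link between pixel size and noise can be put into rough numbers. The sketch below assumes, as a simplification not stated in this article, that the light a pixel collects scales with its area and that photon shot noise is the only noise source, so the signal-to-noise ratio grows as the square root of the photon count. The function names and the photon density are hypothetical; the two pixel sizes are ones quoted later in the article.

```python
import math

def photons_collected(pixel_pitch_um: float, photons_per_um2: float) -> float:
    """Photons landing on a square pixel, assuming light scales with pixel area."""
    return photons_per_um2 * pixel_pitch_um ** 2

def shot_noise_snr(photon_count: float) -> float:
    """SNR under pure photon shot noise (Poisson): signal N, noise sqrt(N)."""
    return math.sqrt(photon_count)

# Hypothetical dim scene: 50 photons per square micron during the exposure.
dim_scene = 50.0
for pitch in (0.8, 1.4):
    n = photons_collected(pitch, dim_scene)
    print(f"{pitch} um pixel: {n:.0f} photons, SNR ~ {shot_noise_snr(n):.1f}")
```

Under these assumptions a 1.4 micron pixel collects about three times the light of a 0.8 micron pixel (the ratio of their areas) and has a signal-to-noise ratio about 1.75 times higher.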

In a 108-megapixel camera the pixels have to be small, because they all need to fit on the mobile camera’s sensor (its matrix). The sensor itself cannot be big by definition, because all the components in a smartphone are packed very tightly.

That is, you just need a large matrix?

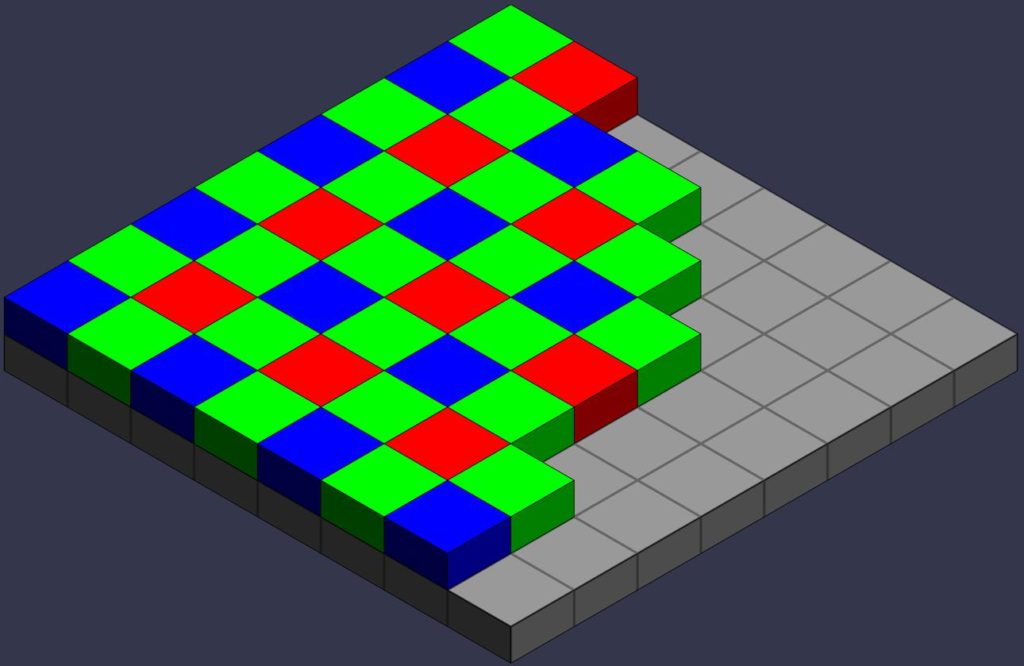

And (or) large pixels. What is a camera matrix? The matrix is a chip made up of light-sensitive photodiodes, the pixels. Under the influence of light, a photodiode creates an electrical signal, which is then converted into a digital signal. That signal goes to the processor, which assigns the right colors to the pixels, and the result is an image.

And how do you find out the size of the sensor and of the pixels in a smartphone’s camera?

Oh, this is a non-trivial task, because smartphone manufacturers prefer not to disclose these parameters if there is nothing to boast about. However, about two years ago the situation in the flagship segment began to change for the better. With its P20 Pro and P30 Pro, Huawei forced other vendors to compete on sensor size as well.

Although brands mostly stay quiet, the sensor size can still be found out. The fact is that today there are two suppliers of image sensors in the smartphone market, Samsung and Sony, and in their presentations of these components the developers reveal all the parameters, including the size of the sensor and of the pixel.

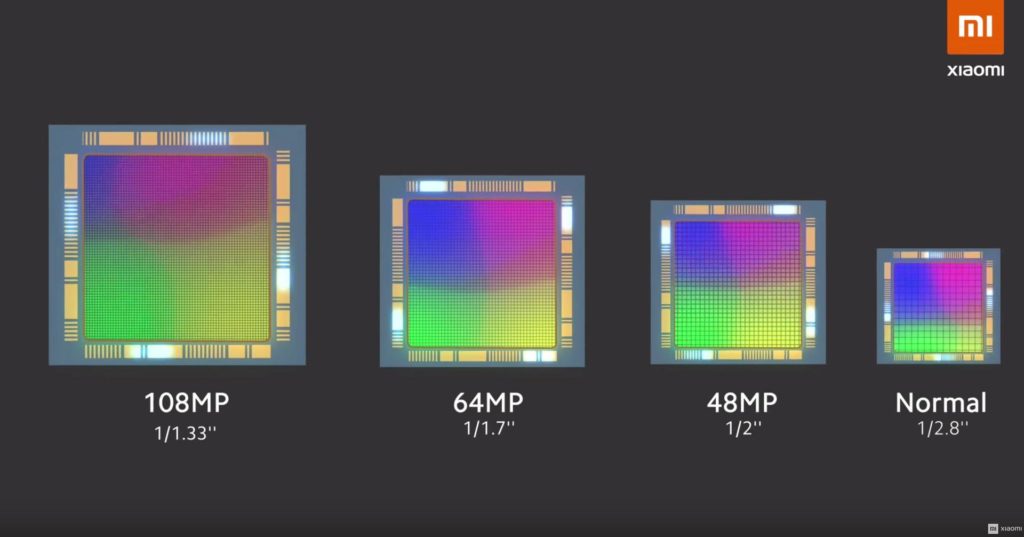

The size of the sensor is the diagonal of the light-sensitive area, which is given in so-called Vidicon inches. These are calculated with a special formula, and without knowing it, it is easy to get confused by the numbers. Be that as it may, we have converted the diagonals of the most popular mobile sensors on the market into ordinary millimeters for you.
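The article does not spell out the conversion formula. A widely used rule of thumb, assumed here rather than taken from the source, is that the sensor diagonal is about two thirds of the nominal inch figure, which works out to roughly 16.9 mm per "inch". A minimal sketch with a hypothetical helper name:

```python
MM_PER_INCH = 25.4
# Assumption: the real diagonal is about 2/3 of the nominal "Vidicon inch" value.
OPTICAL_FORMAT_FACTOR = 2.0 / 3.0

def format_to_diagonal_mm(denominator: float) -> float:
    """Approximate diagonal in mm for a sensor labeled 1/denominator inch."""
    return MM_PER_INCH * OPTICAL_FORMAT_FACTOR / denominator

for denom in (2.0, 1.72, 1.33, 2.55):
    print(f'1/{denom}" is about {format_to_diagonal_mm(denom):.1f} mm')
```

For these four formats the rule lands within about 0.1 mm of the millimeter figures quoted for the corresponding sensors in this article.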

If “48MP” is emblazoned on the back of a Chinese smartphone, there is a 90% chance it is a Sony IMX 586 camera. This sensor is used both in budget phones like the Redmi Note 7 Pro and in nominal flagships such as the OnePlus 7. Its size is 1/2″, or approximately 8.4mm. Each of the 48 million pixels measures 0.8 micrometers (microns).

Samsung’s ISOCELL Bright GW1 has taken over the entire niche of 64-megapixel sensors. It is mainly used in mid-range devices like the Realme XT and Vivo NEX 3. Its physical size is larger, 1/1.72″, or about 9.8mm. The pixel size is the same as in the Sony IMX 586: 0.8 microns.

Now let’s move on to Huawei, which uses Sony sensors. The Mate 30 Pro has an IMX 608 sensor with a nominal resolution of 40 megapixels and a size of 1/1.54″, or about 12mm. The pixel size, according to various sources, starts at 1.15 microns.

The iPhone 11 uses a classic 12-megapixel sensor, most likely from Sony. Apple itself does not disclose this information, and neither does Huawei, for that matter. Teardown specialists solve the problem for them. In any case, the sensor size is known to be 1/2.55″, or about 6.7mm. Yes, the sensor is small, but the pixel size is 1.4 microns.

Now look at the Galaxy S20 sensor. It is called the Samsung ISOCELL Bright HM1. Its size is 1/1.33″, or about 12.7 mm. The ISOCELL Bright HM1 is the largest mobile sensor in the world today. But! The true pixel size still remains small: 0.8 microns.
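The quoted pixel sizes can be roughly cross-checked from the diagonal and the megapixel count alone. The sketch below assumes a 4:3 sensor whose whole diagonal is covered by square pixels, which is a simplification, so it only gives a ballpark figure. The function name is hypothetical; the diagonals and resolutions are the ones given in this article.

```python
import math

def estimated_pitch_um(diagonal_mm: float, megapixels: float,
                       aspect: tuple[int, int] = (4, 3)) -> float:
    """Rough pixel pitch, assuming square pixels cover the whole diagonal."""
    aw, ah = aspect
    width_mm = diagonal_mm * aw / math.hypot(aw, ah)
    pixels_wide = math.sqrt(megapixels * 1e6 * aw / ah)
    return width_mm / pixels_wide * 1000.0  # millimeters to microns

# (name, diagonal in mm, megapixels) as quoted in the article
sensors = [
    ("Sony IMX 586", 8.4, 48),
    ("ISOCELL Bright GW1", 9.8, 64),
    ("iPhone 11 sensor", 6.7, 12),
    ("ISOCELL Bright HM1", 12.7, 108),
]
for name, diagonal, mp in sensors:
    print(f"{name}: ~{estimated_pitch_um(diagonal, mp):.2f} um per pixel")
```

The estimates come out within about 0.1 micron of the quoted values, which illustrates the trade-off: even the largest sensor in the list ends up with small pixels once 108 million of them have to fit on it.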

So the Samsung camera is bad before it has even been tested?

Only in terms of physics. The physical parameters are important, but the software that serves the sensor is equally valuable. The software can be divided into two categories: primary image processing algorithms and post-processing algorithms.

The former make sure that the information reaching the sensor is assembled into a correct image. The latter are needed to improve the picture and apply effects. So, first, the primary algorithms.

Two of them matter most. The first is the Bayer filter, named after its inventor, Bryce Bayer. It is needed to add color to the image obtained from the digital sensor. By default, the sensor produces only a monochrome image, built from which pixels “caught” light and which did not.

The Bayer filter takes a cell of four monochrome pixels (2×2) and paints one dot red, one dot blue and two green. This filter is called RGGB (Red, Green, Green, Blue). Green dominates because it is supposedly better and more pleasant for a person’s trichromatic vision. But this, apparently, is a convention, since there are other combinations. Huawei, for example, prefers RYYB (Red, Yellow, Yellow, Blue), which makes the pictures warmer and brighter.
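To make the 2×2 cell concrete, here is a minimal sketch of how an RGGB mosaic assigns one color to each pixel position. It only models the filter layout; the interpolation that later fills in the two missing colors for every pixel is not shown. The function names and the tile orientation (red in the top-left corner) are assumptions for illustration.

```python
from collections import Counter

# One 2x2 RGGB tile: red top-left, blue bottom-right, green on the other two.
RGGB_TILE = (("R", "G"),
             ("G", "B"))

def filter_color(row: int, col: int) -> str:
    """Color of the filter sitting over the pixel at (row, col)."""
    return RGGB_TILE[row % 2][col % 2]

def mosaic_layout(height: int, width: int) -> list[str]:
    """Filter layout of a height x width sensor, one string per row."""
    return ["".join(filter_color(r, c) for c in range(width))
            for r in range(height)]

layout = mosaic_layout(4, 8)
print("\n".join(layout))
# Green filters appear twice as often as red or blue ones.
print(Counter("".join(layout)))
```

Swapping the two "G" entries in the tile for "Y" would give the RYYB layout mentioned above.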

The second algorithm divides the source image into large or small pixels, depending on the shooting scenario. Remember the 0.8 micron pixel size? Well, Samsung and Sony came up with programs that artificially enlarge the pixels.

The Koreans already have two: Tetracell and Nonacell. The first glues true 0.8 micron pixels into one large digital pixel of 1.6 microns. The second works similarly, but turns the small true pixels into a 2.4 micron digital one. The Japanese so far make do with the Quad Bayer algorithm alone, which, like Tetracell, turns 0.8 microns into 1.6 microns.

Because of these algorithms, all 40-, 48-, 64-, and 108-megapixel smartphones take photos by default with a resolution of 10 megapixels, 12 megapixels, 16 megapixels, and 27 megapixels, respectively.
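The arithmetic behind these figures is simple: merging each n×n block of physical pixels multiplies the effective pixel size by n and divides the pixel count by n². The sketch below shows that arithmetic plus a toy binning step that sums each block. The vendors' real pipelines are proprietary and also have to respect the color filter layout, so the function names and the plain summing approach are illustrative assumptions.

```python
def binned_megapixels(megapixels: float, n: int) -> float:
    """Output resolution after merging every n x n block into one pixel."""
    return megapixels / (n * n)

def binned_pitch_um(pitch_um: float, n: int) -> float:
    """Effective pixel size after merging n x n blocks."""
    return pitch_um * n

def bin_pixels(raw: list[list[int]], n: int) -> list[list[int]]:
    """Sum every n x n block of raw values into one output value.

    Assumes the grid height and width are multiples of n.
    """
    rows, cols = len(raw), len(raw[0])
    return [[sum(raw[r + dr][c + dc] for dr in range(n) for dc in range(n))
             for c in range(0, cols, n)]
            for r in range(0, rows, n)]

for mp in (40, 48, 64, 108):
    print(f"{mp} MP -> {binned_megapixels(mp, 2):.0f} MP with 2x2 merging")
print(f"0.8 um -> {binned_pitch_um(0.8, 2):.1f} um (2x2), "
      f"{binned_pitch_um(0.8, 3):.1f} um (3x3)")

raw = [[1, 2, 3, 4],
       [5, 6, 7, 8],
       [1, 1, 1, 1],
       [1, 1, 1, 1]]
print(bin_pixels(raw, 2))  # [[14, 22], [4, 4]]
```

Each output value gathers the light of four (or nine) physical pixels, which is why the merged image is brighter and less noisy at the cost of resolution.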

That’s complicated. Just tell me what it all means for an ordinary user.

This means that pictures from all high-megapixel smartphones automatically undergo heavy digital processing at the initial stage of image formation. On the one hand, this is good: Samsung and Sony claim that synthetic pixel enlargement is a blessing that yields brighter pictures in low light. On the other hand, it is bad, because gluing pixels together does not always go smoothly. Every now and then, artifacts show up in the pictures: irregular skin texture, smeared brickwork on a building, or blurred tree crowns.

And what about shooting at 108 megapixels?

With super-resolution, the situation is comical. Shooting at 108 megapixels (or 40, 48 or 64 megapixels) requires switching on a separate mode in the smartphone interface. Moreover, the button is often buried deep in the settings. This is either a consequence of low demand for the mode, or a deliberate attempt to fence it off from the user.

Both are easy to explain, because taking pictures at 40 to 108 megapixels is pointless. First, in this mode all auxiliary algorithms are disabled: HDR, bokeh, night mode and more. As a result, all that is left to shoot is landscapes in perfect light. Second, even when shooting at the artificial 27 megapixels, the Xiaomi Mi Note 10 takes two to three seconds to render the image. That is not much, but any delay while shooting is bad. When shooting at 108 megapixels, the smartphone freezes for longer, as the processor chokes on a gigantic array of data. Third, because of that same large amount of incoming data, the camera shutter works slowly, so it is advisable to shoot from a tripod.

An ordinary user could not care less about any of these three points. They want to take out a smartphone, press a button and get a beautiful shot, without jumping through hoops.

Oh, the iPhone takes good pictures without jumping through hoops!

In general, there is a belief that the more processing an image goes through (especially at an early stage of its formation), the further it is from the real scene. This is a fine line, because it is very easy to slide into subjectivity, like some militant audiophile who warms up his headphones before listening to FLAC. However, in the world of mobile photography there are still objective reasons to agree with the statement above.

Last fall, store shelves were bursting with 48-megapixel and even 64-megapixel smartphones. Apple and Google responded by announcing 12-megapixel cameras. Are they idiots?

Not at all. First, in both cases the pixels on the sensors are almost twice as large as those of competitors. As a result, they capture light, if not better, then certainly no worse. That is, physics still decides.

Second, the marketers at these companies do not take drugs and do not come up with far-fetched use cases for 108-megapixel photos. They ignore the big numbers and go a different way. Both companies are betting on artificial-intelligence algorithms which, at the post-processing stage, turn photos into something close to a work of art. And this is exactly what an ordinary user wants: take a picture and get something beautiful. No tripod, no settings, no wasted time.

Source: life.ru